Walkthrough

This walkthrough will guide you through a basic installation of the Oracle AI Optimizer and Toolkit (the AI Optimizer). It will allow you to experiment with GenAI, using Retrieval-Augmented Generation (RAG) with Oracle Database 23ai at the core.

By the end of the walkthrough you will be familiar with:

- Configuring a Large Language Model (LLM)

- Configuring an Embedding Model

- Configuring the Vector Storage

- Splitting, Embedding, and Storing vectors

- Configuring SelectAI

- Experimenting with the AI Optimizer

What you’ll need for the walkthrough:

- Internet Access (docker.io and container-registry.oracle.com)

- Access to an environment where you can run container images (Podman or Docker).

- 100G of free disk space.

- 12G of usable memory.

- Sufficient GPU/CPU resources to run the LLM, embedding model, and database (see below).

Performance: A Word of Caution

The performance will vary depending on the infrastructure.

LLMs and Embedding Models are designed to use GPUs, but this walkthrough can work on machines with just CPUs, albeit much slower! When testing the LLM, if you don’t get a response in a couple of minutes, your hardware is not sufficient to continue with the walkthrough.

Installation

Same… but Different

The walkthrough will reference podman commands. If applicable to your environment, podman can be substituted with docker.

If you are using docker, make the walkthrough easier by aliasing the podman command:

alias podman=docker
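Note that bash does not expand aliases in non-interactive scripts by default, so the alias mainly helps at an interactive prompt. If you plan to paste the walkthrough commands into a script, a shell function is one possible alternative. The following is a minimal sketch, assuming docker is installed; docker may not accept every podman flag used in this walkthrough, so treat it as a convenience rather than a guarantee.

```shell
# Forward every "podman ..." invocation to docker, arguments unchanged.
# Unlike an alias, a function also works inside non-interactive scripts.
podman() {
  docker "$@"
}
```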

You will run four container images to establish the “Infrastructure”:

- On-Premises LLM - llama3.1

- On-Premises Embedding Model - mxbai-embed-large

- Vector Storage/SelectAI - Oracle Database 23ai Free

- The AI Optimizer

LLM - llama3.1

To enable the ChatBot functionality, access to an LLM is required. The walkthrough will use Ollama to run the llama3.1 LLM.

Start the Ollama container:

The Container Runtime is native:

podman run -d --gpus=all -v ollama:$HOME/.ollama -p 11434:11434 --name ollama docker.io/ollama/ollama

The Container Runtime is backed by a virtual machine. The VM should be started with 12G memory and 100G disk space allocated.

podman run -d -e OLLAMA_NUM_PARALLEL=1 -v ollama:$HOME/.ollama -p 11434:11434 --name ollama docker.io/ollama/ollama

Note: AI Runners like Ollama, LM Studio, etc. will not utilize Apple Silicon’s “Metal” GPU when running in a container. This may change as the landscape evolves.

You can install and run Ollama natively outside a container and it will take advantage of the “Metal” GPU. Later in the Walkthrough, when configuring the models, the API URL for the Ollama model will be your host’s IP address.

The Container Runtime is backed by a virtual machine. The VM should be started with 12G memory and 100G disk space allocated.

podman run -d --gpus=all -v ollama:$HOME/.ollama -p 11434:11434 --name ollama docker.io/ollama/ollama

Note: AI Runners like Ollama, LM Studio, etc. will not utilize non-NVIDIA GPUs when running in a container. This may change as the landscape evolves.

You can install and run Ollama natively outside a container and it will take advantage of non-NVIDIA GPUs. Later in the Walkthrough, when configuring the models, the API URL for the Ollama model will be your host’s IP address.

Pull the LLM into the container:

podman exec -it ollama ollama pull llama3.1

Test the LLM:

Performance: Fail Fast…

Unfortunately, if the curl command below does not respond within 5-10 minutes, the rest of the walkthrough will be unbearable. If this is the case, please consider using different hardware.

curl http://127.0.0.1:11434/api/generate -d '{ "model": "llama3.1", "prompt": "Why is the sky blue?", "stream": false }'
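To make the fail-fast check explicit, the request can be bounded with curl's `--max-time` option. The following is a minimal sketch, assuming the Ollama container from the previous step is listening on 127.0.0.1:11434; `build_payload` and `test_llm` are hypothetical helper names, and the 600-second limit simply mirrors the upper end of the 5-10 minute window.

```shell
# Build the JSON request body for the /api/generate endpoint.
# The prompt is inserted verbatim, so it must not contain double quotes.
build_payload() {
  printf '{ "model": "%s", "prompt": "%s", "stream": false }' "$1" "$2"
}

# Send one prompt and give up after 600 seconds.
# A non-zero exit status means no usable answer arrived in time.
test_llm() {
  curl --silent --show-error --fail --max-time 600 \
    http://127.0.0.1:11434/api/generate \
    -d "$(build_payload llama3.1 'Why is the sky blue?')"
}

# Usage: test_llm || echo "LLM did not answer in time"
```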

Embedding - mxbai-embed-large

To enable the RAG functionality, access to an embedding model is required. The walkthrough will use Ollama to run the mxbai-embed-large embedding model.

Pull the embedding model into the container:

podman exec -it ollama ollama pull mxbai-embed-large
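As with the LLM, it can be worth confirming that the embedding model answers before moving on. The following is a minimal sketch, assuming your Ollama version exposes the `/api/embed` endpoint on 127.0.0.1:11434; `embed_payload` and `test_embedding` are hypothetical helper names. A successful response should contain an array of floating-point numbers.

```shell
# Build the JSON request body for the /api/embed endpoint.
# The input text is inserted verbatim, so it must not contain double quotes.
embed_payload() {
  printf '{ "model": "%s", "input": "%s" }' "$1" "$2"
}

# Request an embedding for a short sentence, giving up after 600 seconds.
test_embedding() {
  curl --silent --show-error --fail --max-time 600 \
    http://127.0.0.1:11434/api/embed \
    -d "$(embed_payload mxbai-embed-large 'Why is the sky blue?')"
}

# Usage: test_embedding || echo "embedding model did not answer"
```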

The AI Optimizer

The AI Optimizer provides an easy-to-use front-end for experimenting with LLM parameters and RAG.

Download and Unzip the latest release of the AI Optimizer:

curl -LO https://github.com/oracle-samples/ai-optimizer/releases/latest/download/ai-optimizer-src.tar.gz

mkdir ai-optimizer

tar zxf ai-optimizer-src.tar.gz -C ai-optimizer

Build the Container Image:

cd ai-optimizer

podman build --arch amd64 -t localhost/ai-optimizer-aio:latest .

Start the AI Optimizer:

podman run -d --name ai-optimizer-aio --network=host localhost/ai-optimizer-aio:latest
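The container may need a little time before it starts serving requests. The following is a minimal sketch for waiting until the web interface answers, assuming it listens on http://localhost:8501 (the address used in the Configuration section) and that curl is installed; `wait_for_url` is a hypothetical helper, not part of the AI Optimizer. If your container runtime is backed by a virtual machine, the port may not be reachable from the host until a port-forward is in place.

```shell
# Poll a URL until it answers successfully or the attempts run out.
# $1 = URL to probe, $2 = maximum number of attempts (default 30).
wait_for_url() {
  url=$1
  tries=${2:-30}
  i=0
  while [ "$i" -lt "$tries" ]; do
    if curl --silent --fail --output /dev/null "$url"; then
      return 0
    fi
    i=$((i + 1))
    sleep 2
  done
  return 1
}

# Usage: wait_for_url http://localhost:8501 && echo "AI Optimizer is up"
```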

Vector Storage - Oracle Database 23ai Free

AI Vector Search in Oracle Database 23ai provides the ability to store and query private business data using a natural language interface. The AI Optimizer uses these capabilities to provide more accurate and relevant LLM responses via Retrieval-Augmented Generation (RAG). Oracle Database 23ai Free provides an ideal, no-cost vector store for this walkthrough.

To start Oracle Database 23ai Free:

Start the container:

podman run -d --name ai-optimizer-db -p 1521:1521 container-registry.oracle.com/database/free:latest

Alter the vector_memory_size parameter and create a new database user:

podman exec -it ai-optimizer-db sqlplus '/ as sysdba'

alter system set vector_memory_size=512M scope=spfile;

alter session set container=FREEPDB1;

CREATE USER "WALKTHROUGH" IDENTIFIED BY OrA_41_OpTIMIZER DEFAULT TABLESPACE "USERS" TEMPORARY TABLESPACE "TEMP";

GRANT "DB_DEVELOPER_ROLE" TO "WALKTHROUGH";

ALTER USER "WALKTHROUGH" DEFAULT ROLE ALL;

ALTER USER "WALKTHROUGH" QUOTA UNLIMITED ON USERS;

EXIT;

Bounce the database for the vector_memory_size to take effect:

podman container restart ai-optimizer-db
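Once the database is back up, you can confirm that the new setting is active. The following is a minimal sketch, assuming the container name used above and that the database has finished restarting; `check_vector_memory` is a hypothetical helper name. It pipes SQL*Plus's `show parameter` command into a non-interactive session.

```shell
# Print the current vector_memory_size setting.
# -i keeps stdin open for the piped commands; -S runs SQL*Plus in silent mode.
check_vector_memory() {
  printf 'show parameter vector_memory_size\nexit\n' |
    podman exec -i ai-optimizer-db sqlplus -S '/ as sysdba'
}

# Usage: check_vector_memory
```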

Configuration

Operating System specific instructions:

If you are running on a remote host, you may need to allow access to the 8501 port.

For example, in Oracle Linux 8/9 with firewalld:

firewall-cmd --zone=public --add-port=8501/tcp

As the container is running in a VM, a port-forward is required from the localhost to the Podman VM:

podman machine ssh -- -N -L 8501:localhost:8501

With the “Infrastructure” in place, you’re ready to configure the AI Optimizer.

In a web browser, navigate to http://localhost:8501:

Notice that there are no language models configured to use. Let’s start the configuration.

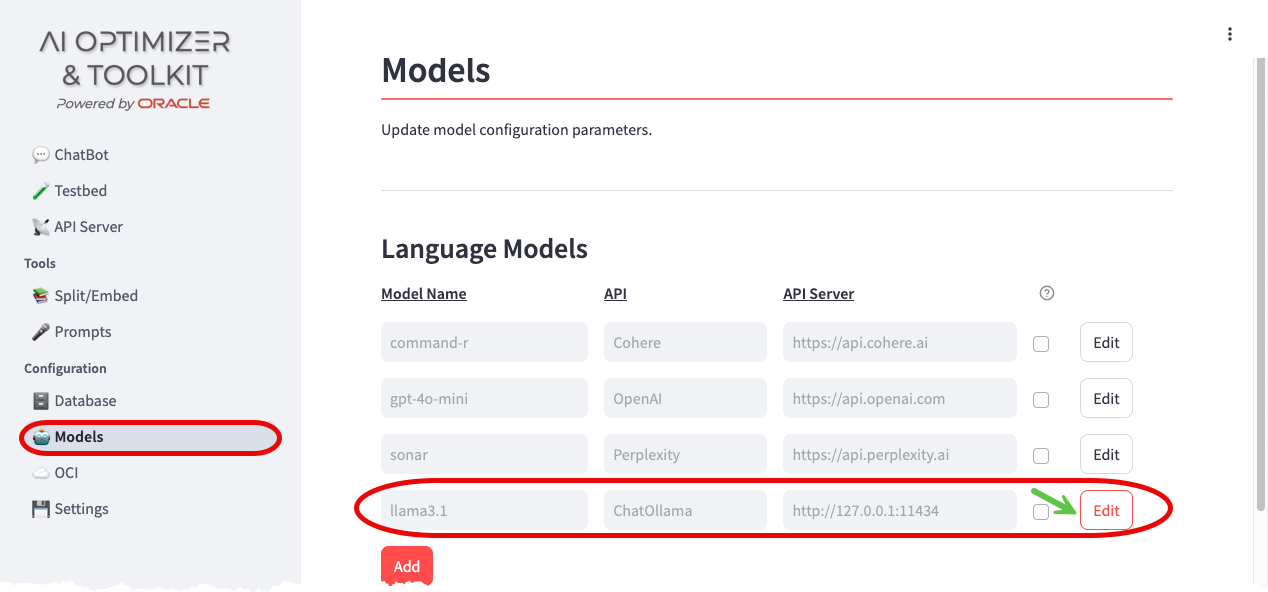

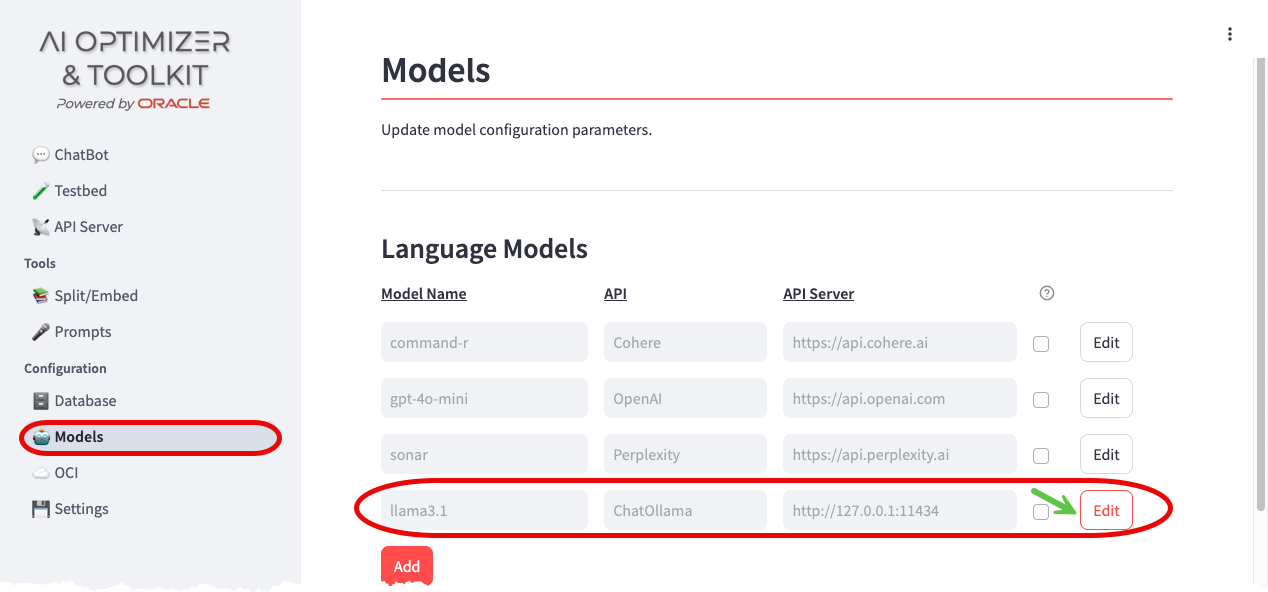

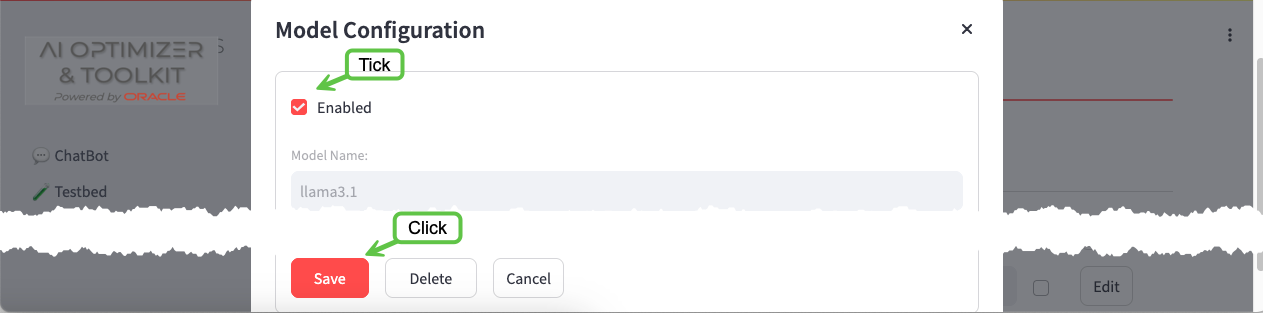

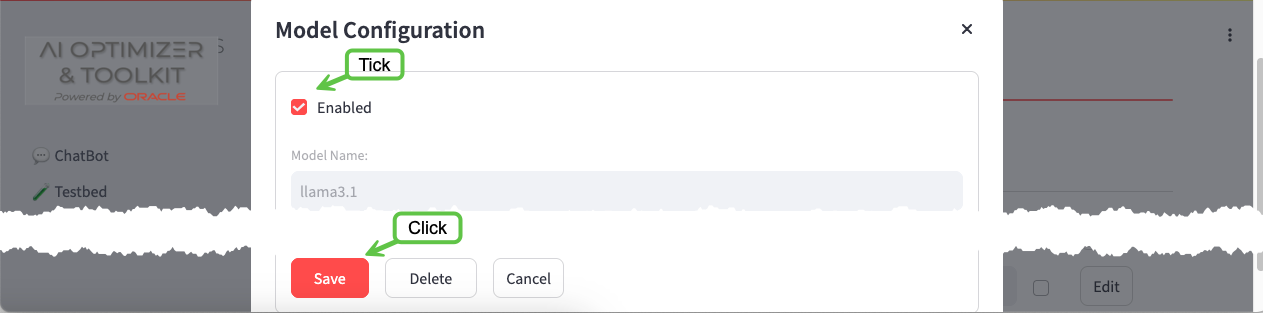

Configure the LLM

To configure the On-Premises LLM, navigate to the Configuration -> Models screen:

- Enable the llama3.1 model that you pulled earlier by clicking the Edit button

- Tick the Enabled checkbox, leave all other settings as-is, and Save

More information about configuring LLMs can be found in the Model Configuration documentation.

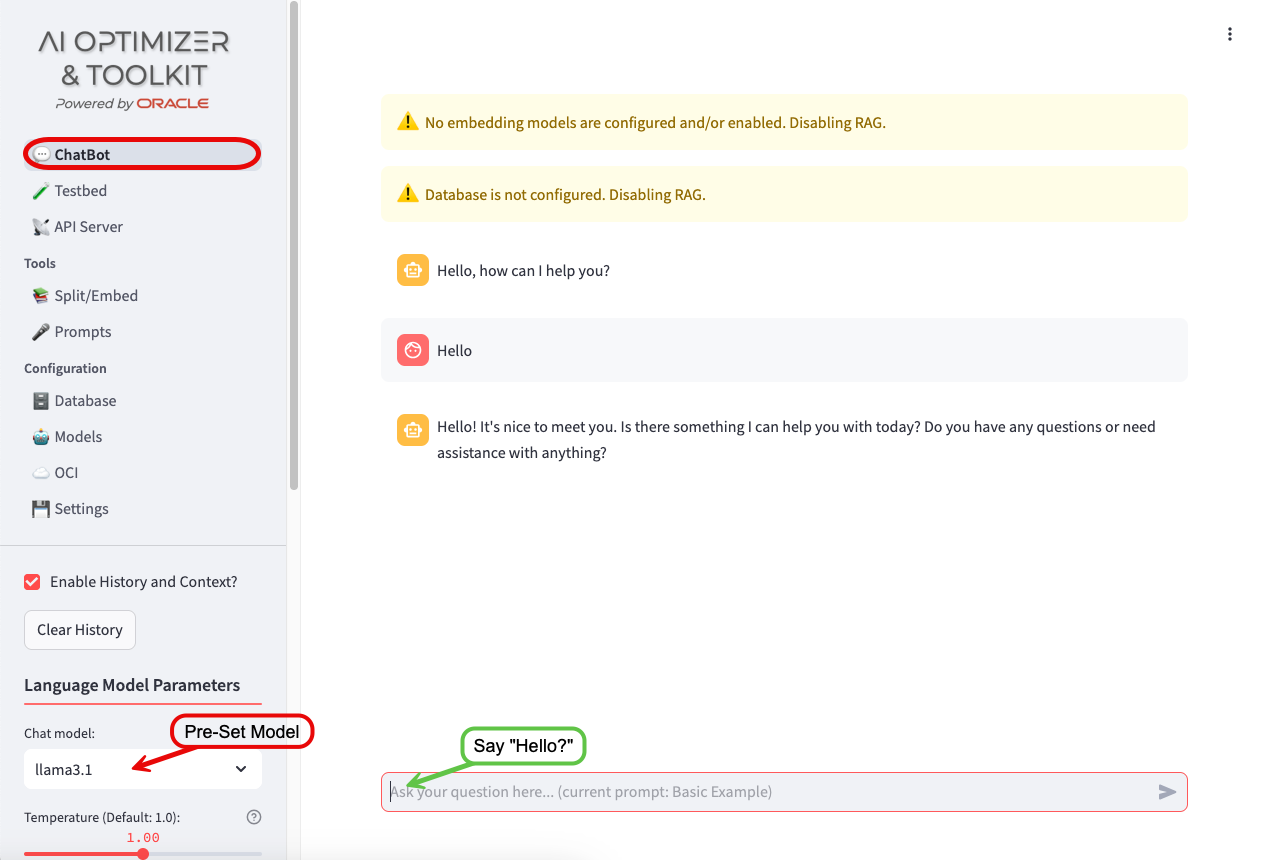

Say “Hello?”

Navigate to the ChatBot screen:

The error about language models will have disappeared, but there are new warnings about embedding models and the database. You’ll take care of those in the next steps.

The Chat model: field will have been pre-set to the only enabled LLM (llama3.1), and a dialog box to interact with the LLM will be ready for input.

Feel free to play around with the different LLM Parameters, hovering over the icons to get more information on what they do.

You’ll come back to the ChatBot later to experiment further.

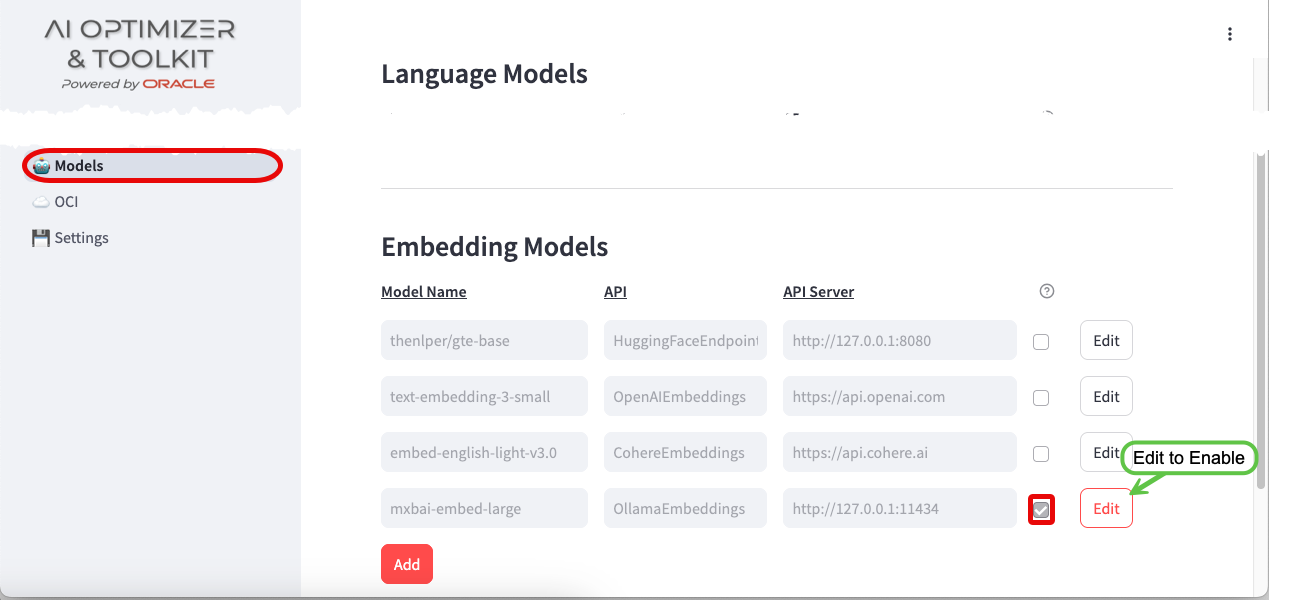

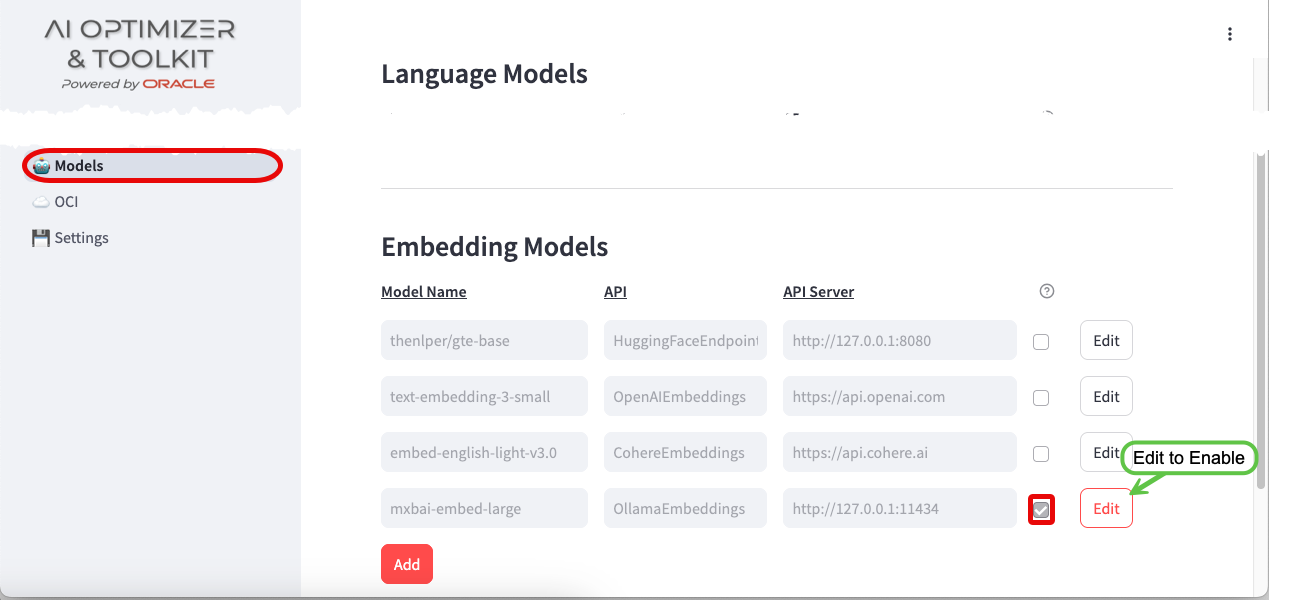

Configure the Embedding Model

To configure the On-Premises Embedding Model, navigate back to the Configuration -> Models screen:

- Enable the mxbai-embed-large Embedding Model following the same process as you did for the Language Model.

More information about configuring embedding models can be found in the Model Configuration documentation.

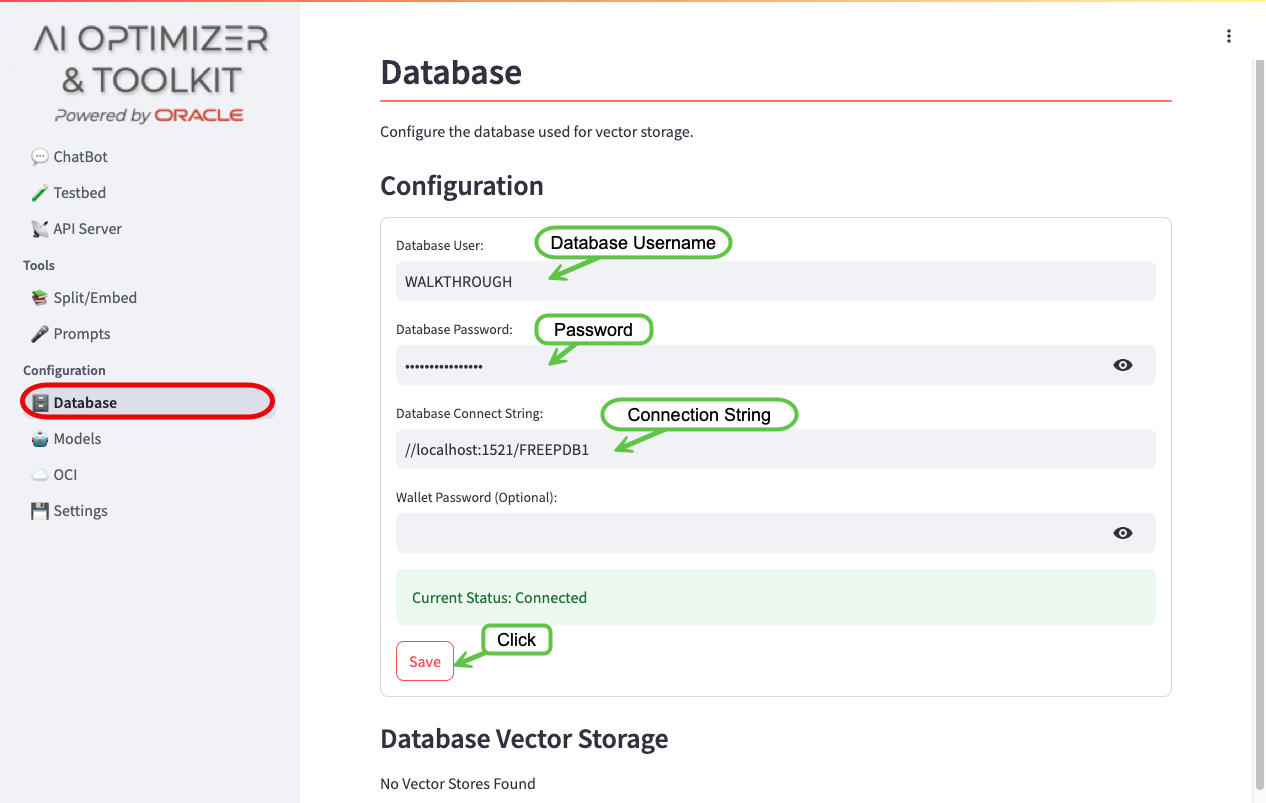

Configure the Database

To configure Oracle Database 23ai Free, navigate to the Configuration -> Database screen:

- Enter the Database Username: WALKTHROUGH

- Enter the Database Password for the database user: OrA_41_OpTIMIZER

- Enter the Database Connection String: //localhost:1521/FREEPDB1

- Save

More information about configuring the database can be found in the Database Configuration documentation.

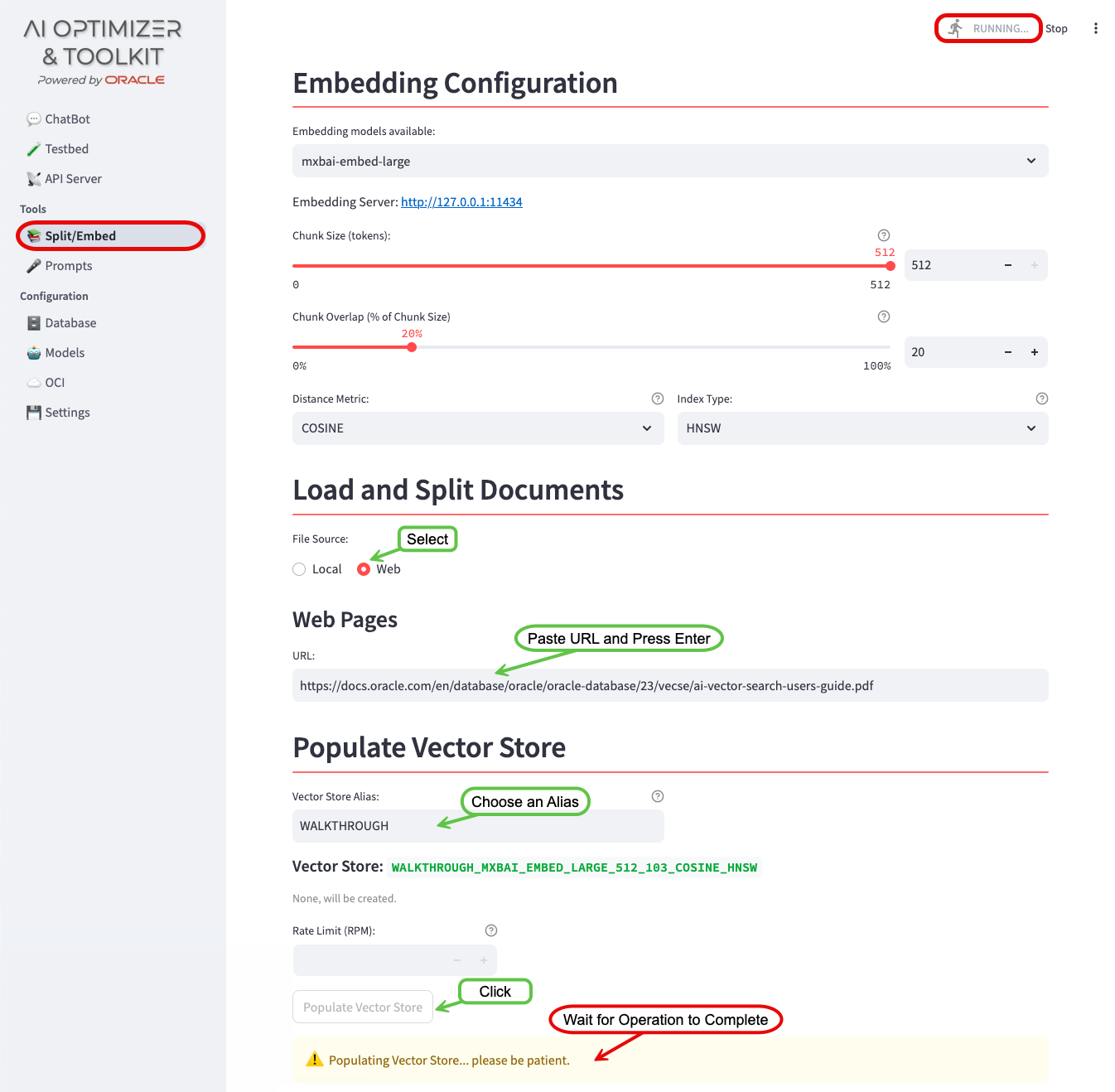

Split and Embed

With the embedding model and database configured, you can now split and embed documents for use in Vector Search.

Navigate to the Split/Embed Screen:

- Change the File Source to Web

- Enter the URL: https://docs.oracle.com/en/engineered-systems/health-diagnostics/autonomous-health-framework/ahfug/oracle-autonomous-health-framework-users-guide.pdf

- Press Enter

- Give the Vector Store an Alias: WALKTHROUGH

- Click Load, Split, and Populate Vector Store

- Please be patient…

Performance: Grab a beverage of your choosing…

Depending on the infrastructure, the embedding process can take a few minutes. As long as the “RUNNING” dialog in the top-right corner is moving… it’s working.

Thumb Twiddling

You can watch the progress of the embedding by streaming the server logs: podman exec -it ai-optimizer-aio tail -f /app/apiserver_8000.log

Chunks are processed in batches. Wait until the logs output: POST ... HTTP/1.1" 200 OK before continuing.

Query the Vector Store

After the splitting and embedding process completes, you can query the Vector Store to see the chunked and embedded document:

From the command line:

Connect to the Oracle Database 23ai Database:

podman exec -it ai-optimizer-db sqlplus 'WALKTHROUGH/OrA_41_OpTIMIZER@FREEPDB1'

Query the Vector Store:

select * from WALKTHROUGH.WALKTHROUGH_MXBAI_EMBED_LARGE_512_103_COSINE_HNSW;

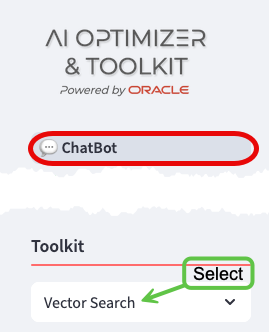
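Selecting every row prints the full text and vector of each chunk, which can flood the terminal. A quick row count is often enough to confirm that the store was populated. The following is a minimal sketch, assuming the table name shown above matches the one created in your environment; `count_chunks` is a hypothetical helper name.

```shell
# Count the stored chunks without printing their contents.
# The quoted here-document passes the SQL to SQL*Plus unchanged.
count_chunks() {
  podman exec -i ai-optimizer-db sqlplus -S 'WALKTHROUGH/OrA_41_OpTIMIZER@FREEPDB1' <<'SQL'
select count(*) from WALKTHROUGH.WALKTHROUGH_MXBAI_EMBED_LARGE_512_103_COSINE_HNSW;
exit
SQL
}

# Usage: count_chunks
```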

Experiment with Vector Search

With the AI Optimizer configured, you’re ready for some experimentation.

Navigate back to the ChatBot.

For this guided experiment, perform the following:

- Ask the ChatBot:

In Oracle Database 23ai, how do I use AHF?

Responses may vary, but generally the ChatBot’s response will be inaccurate, including:

- Not understanding that 23ai is an Oracle Database release. This is known as knowledge cutoff.

- Suggestions that AHF has to do with a nonexistent Hybrid Feature and running emcli commands. These are hallucinations.

Now select “Vector Search” in the Toolkit options and simply ask: Are you sure?

Performance: Host Overload…

With RAG enabled, all the services (LLM/Embedding Models and Database) are being utilized simultaneously:

- The LLM is rephrasing “Are you sure?” into a query that takes into account the conversation history and context

- The embedding model is being used to convert the rephrased query into vectors for a similarity search

- The database is being queried for documentation chunks similar to the rephrased query (AI Vector Search)

- The LLM is grading the relevancy of the documents retrieved against the query

- The LLM is completing its response using the documents from the database (if the documents are relevant)

Depending on your hardware, this may cause the response to be significantly delayed.

By asking Are you sure?, you are taking advantage of the AI Optimizer’s history and context functionality.

The response should be different and include references to Cluster Health Advisor and links to the embedded documentation where this information can be found. It might even include an apology!

What’s Next?

You should now have a solid foundation in utilizing the AI Optimizer. To take your experiments to the next level, consider exploring these additional bits of functionality:

- Turn On/Off/Clear history

- Experiment with different Large Language Models (LLMs) and Embedding Models

- Tweak LLM parameters, including Temperature and Penalties, to fine-tune model performance

- Investigate various strategies for splitting and embedding text data, such as adjusting chunk-sizes, overlaps, and distance metrics

Clean Up

To clean up the walkthrough “Infrastructure”, stop and remove the containers.

podman container rm ai-optimizer-db --force

podman container rm ai-optimizer-aio --force

podman container rm ollama --force
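The three removals can also be wrapped in a loop. The following is a minimal sketch; `cleanup_walkthrough` is a hypothetical helper name, and the final command is an addition to the original steps: it removes the named `ollama` volume created by the `-v ollama:...` option, which discards anything stored in it. Skip that line if you want to keep the volume.

```shell
# Remove the three walkthrough containers, then the named ollama volume.
cleanup_walkthrough() {
  for name in ai-optimizer-db ai-optimizer-aio ollama; do
    podman container rm --force "$name"
  done
  # Assumption: the volume is no longer needed once the containers are gone.
  podman volume rm ollama
}

# Usage: cleanup_walkthrough
```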