🤖 Model Configuration

Supported Models

At a minimum, a Large Language Model (LLM) must be configured in Oracle AI Microservices Sandbox for basic functionality. For Retrieval-Augmented Generation (RAG), an Embedding Model will also need to be configured.

10-Sept-2024: Documentation In-Progress…

If there is a specific model that you would like to use with the Oracle AI Microservices Sandbox, please open an issue in GitHub.

| Model | Type | API | On-Premises |

|---|---|---|---|

| llama3.1 | LLM | ChatOllama | X |

| gpt-3.5-turbo | LLM | OpenAI | |

| gpt-4o-mini | LLM | OpenAI | |

| gpt-4 | LLM | OpenAI | |

| gpt-4o | LLM | OpenAI | |

| llama-3-sonar-small-128k-chat | LLM | ChatPerplexity | |

| llama-3-sonar-small-128k-online | LLM | ChatPerplexity | |

| command-r | LLM | Cohere | |

| mxbai-embed-large | Embed | OllamaEmbeddings | |

| nomic-embed-text | Embed | OllamaEmbeddings | X |

| all-minilm | Embed | OllamaEmbeddings | X |

| thenlper/gte-base | Embed | HuggingFaceEndpointEmbeddings | X |

| text-embedding-3-small | Embed | OpenAIEmbeddings | |

| text-embedding-3-large | Embed | OpenAIEmbeddings | |

| embed-english-v3.0 | Embed | CohereEmbeddings | |

| embed-english-light-v3.0 | Embed | CohereEmbeddings |

Configuration

The models can either be configured using environment variables or through the Sandbox interface. To configure models through environment variables, please read the Additional Information about the specific model you would like to configure.

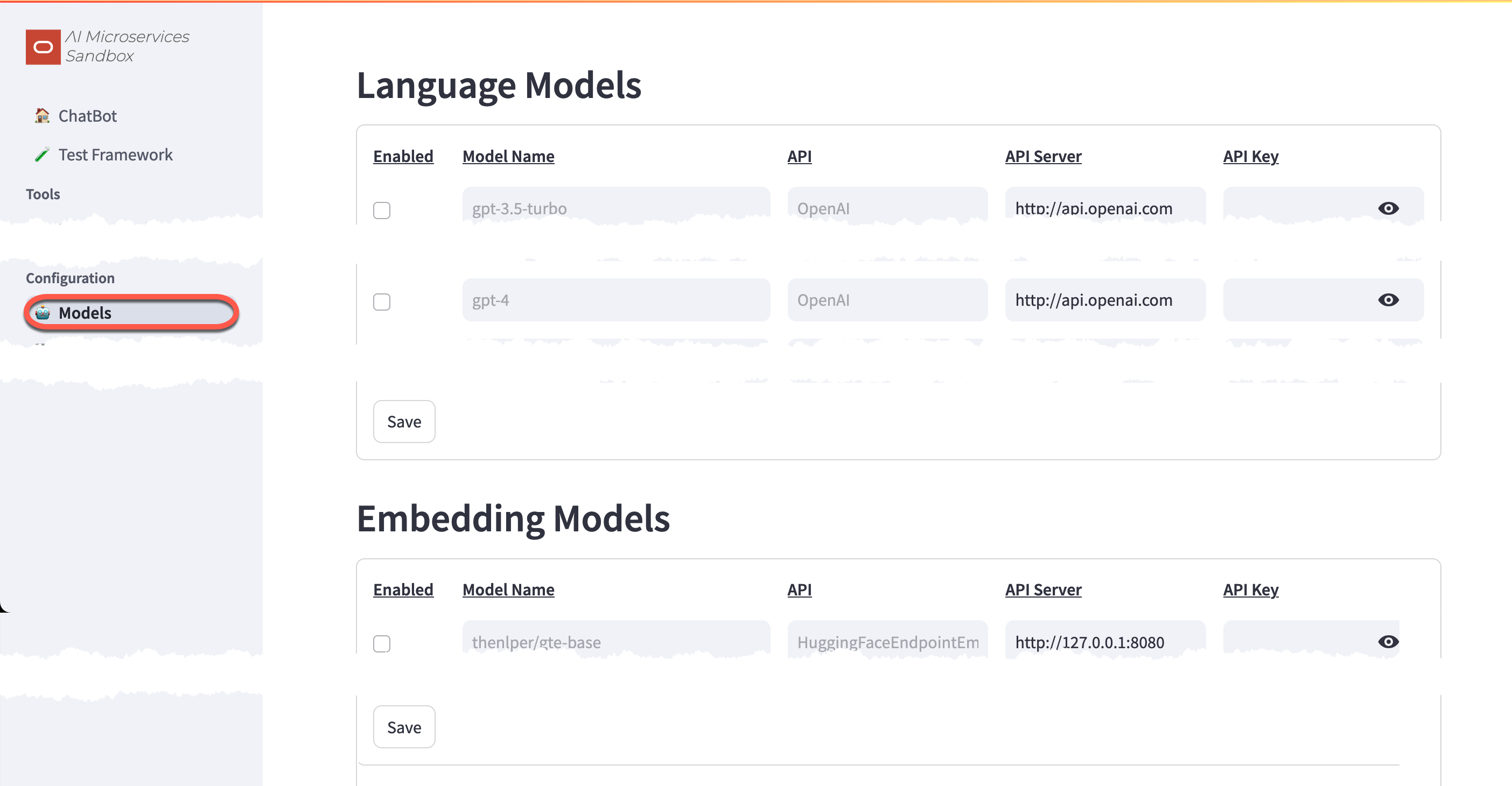

To configure an LLM or embedding model from the Sandbox, navigate to Configuration -> Models:

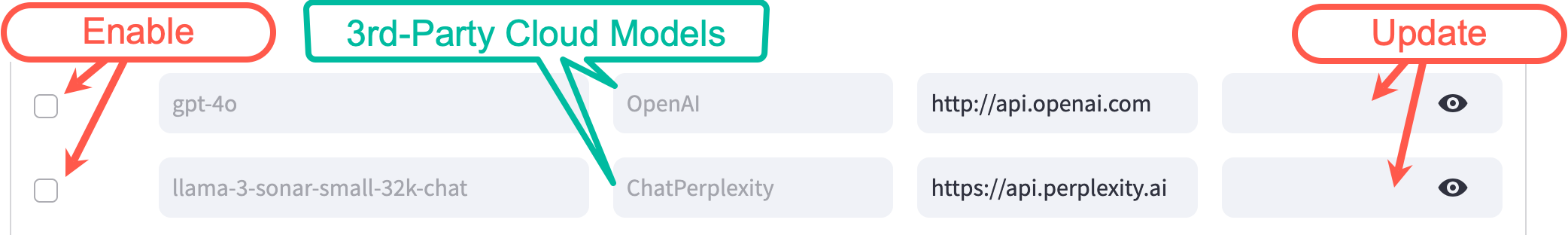

Here you can configure both Large Language Models and Embedding Models. Set the API Keys and API URL as required. You can also Enable and Disable models.

API Keys

Third-Party cloud models, such as OpenAI and Perplexity AI, require API Keys. These keys are tied to registered, funded accounts on these platforms. For more information on creating an account, funding it, and generating API Keys for third-party cloud models, please visit their respective sites.

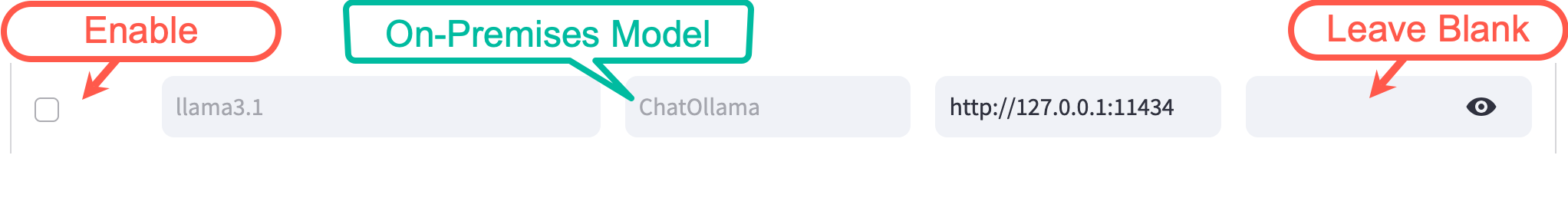

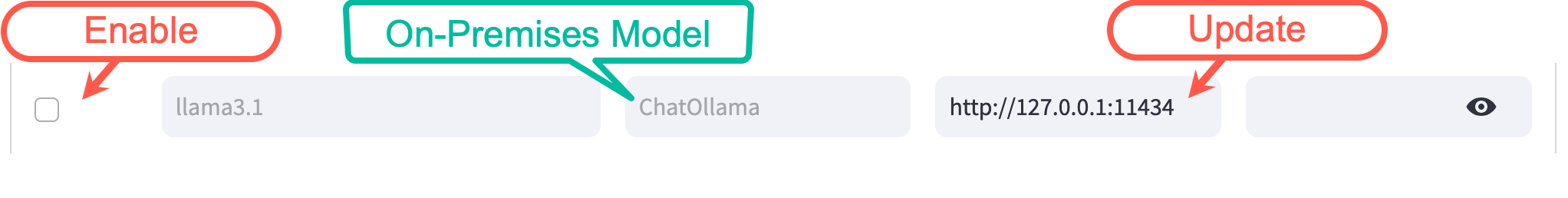

On-Premises models, such as those from Ollama or HuggingFace usually do not require API Keys. These values can be left blank.

API URL

When using an on-premises model, for performance purposes, they should be running on hosts with GPUs. As the Sandbox does not require GPUs, often is the case that the API URL for these models will be the IP or hostname address of a remote host. Specify the API URL and Port of the remote host.

Additional Information

Ollama

Ollama is an open-source project that simplifies the running of LLMs and Embedding Models On-Premises.

When configuring an Ollama model in the Sandbox, set the API Server URL (e.g http://127.0.0.1:11434) and leave the API Key blank. Substitute the IP Address with the IP of where Ollama is running.

Skip the GUI!

You can set the following environment variable to automatically set the API Server URL and enable Ollama models (change the IP address and Port, as applicable to your environment):

export ON_PREM_OLLAMA_URL=http://127.0.0.1:11434Quick-start

Example of running llama3.1 on a Linux host:

- Install Ollama:

sudo curl -fsSL https://ollama.com/install.sh | sh- Pull the llama3.1 model:

ollama pull llama3.1- Start Ollama

ollama serveFor more information and instructions on running Ollama on other platforms, please visit the Ollama GitHub Repository.

HuggingFace

HuggingFace is a platform where the machine learning community collaborates on models, datasets, and applications. It provides a large selection of models that can be run both in the cloud and On-Premises.

Skip the GUI!

You can set the following environment variable to automatically set the API Server URL and enable HuggingFace models (change the IP address and Port, as applicable to your environment):

:

export ON_PREM_HF_URL=http://127.0.0.1:8080Quick-start

Example of running thenlper/gte-base in a container:

Set the Model based on CPU or GPU

For CPUs:

export HF_IMAGE=ghcr.io/huggingface/text-embeddings-inference:cpu-1.2For GPUs:export HF_IMAGE=ghcr.io/huggingface/text-embeddings-inference:0.6Define a Temporary Volume

export TMP_VOLUME=/tmp/hf_data mkdir -p $TMP_VOLUMEDefine the Model

export HF_MODEL=thenlper/gte-baseStart the Container

podman run -d -p 8080:80 -v $TMP_VOLUME:/data --name hftei-gte-base \ --pull always ${image} --model-id $HF_MODEL --max-client-batch-size 5024Determine the IP

docker inspect hftei-gte-base | grep IPANOTE: if there is no IP, use 127.0.0.1

Cohere

Cohere is an AI-powered answer engine. To use Cohere, you will need to sign-up and provide the Sandbox an API Key. Cohere offers a free-trial, rate-limited API Key.

WARNING: Cohere is a cloud model and you should familiarize yourself with their Privacy Policies if using it to experiment with private, sensitive data in the Sandbox.

Skip the GUI!

You can set the following environment variable to automatically set the API Key and enable Perplexity models:

:

export COHERE_API_KEY=<super-secret API Key>OpenAI

OpenAI is an AI research organization behind the popular, online ChatGPT chatbot. To use OpenAI models, you will need to sign-up, purchase credits, and provide the Sandbox an API Key.

WARNING: OpenAI is a cloud model and you should familiarize yourself with their Privacy Policies if using it to experiment with private, sensitive data in the Sandbox.

Skip the GUI!

You can set the following environment variable to automatically set the API Key and enable OpenAI models:

:

export OPENAI_API_KEY=<super-secret API Key>Perplexity AI

Perplexity AI is an AI-powered answer engine. To use Perplexity AI models, you will need to sign-up, purchase credits, and provide the Sandbox an API Key.

WARNING: Perplexity AI is a cloud model and you should familiarize yourself with their Privacy Policies if using it to experiment with private, sensitive data in the Sandbox.

Skip the GUI!

You can set the following environment variable to automatically set the API Key and enable Perplexity models:

:

export PPLX_API_KEY=<super-secret API Key>